Google

Key Points

- The Glass Explorer Program is as much a field test of the hardware as it is an effort to discover how developers & users will apply this new technology.

- Early demos show the potential of augmented reality applications, but hardware capable of making them useful is likely at least another two generations away.

- Glass camera offers a unique perspective for content creation & delivery.

- Ideal for displaying information that can be organized into cards. Cards could have additional drill-down multimedia content including photos, audio, and video.

- Potential to communicate urgent messages.

The Device

Glass is a head-mounted display, with processor, battery, & prism lens over the right eye. To interact with the device, you use voice commands combined with touch-input along the right frame. The current iteration has optional lens inserts, and with recent updates, it can now work with a range of prescription lenses.

Glass has built-in WiFi connectivity for data. Configuration and application installation are handled through the MyGlass companion application, either on the web or on a mobile device. Glass requires a Google account.

When WiFi is not available, Glass can use Bluetooth tethering with an Android or iOS device. When connected via Bluetooth to a device running the MyGlass application, Glass uses location information from the smartphone and can send and receive SMS text messages. Glass also functions as a Bluetooth headset, although audio quality is limited without the included earbud, which is optional. Without the earbud, Glass uses a bone conduction transducer.

Battery life is challenging. With all sensors enabled, moderate use resulted in approximately four hours of usable life. With wink detection, on-head detection, and head-tilt detection disabled, moderate use resulted in a full business day (nine hours) of usable life. Capturing video, video conferencing, and Augmented Reality features can significantly reduce battery life.
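As a rough worked example, the two runtimes above can be turned into a relative power figure. The sketch assumes that both runs started on a full charge, ran the battery down completely, and involved comparable "moderate use", none of which the measurements guarantee. The function name `relative_draw` is illustrative.

```python
def relative_draw(baseline_hours: float, reduced_hours: float) -> float:
    """Average draw of the longer run as a fraction of the shorter run.

    Assumes both runs drained the same battery capacity, so average
    draw is inversely proportional to runtime.
    """
    if baseline_hours <= 0 or reduced_hours <= 0:
        raise ValueError("runtimes must be positive")
    return baseline_hours / reduced_hours


all_sensors_hours = 4.0    # all sensors enabled (figure from the text)
detection_off_hours = 9.0  # wink, on-head, and head-tilt detection disabled

fraction = relative_draw(all_sensors_hours, detection_off_hours)
savings = 1.0 - fraction

print(f"Draw with detection off: {fraction:.0%} of baseline")  # 44%
print(f"Implied reduction: {savings:.0%}")                     # 56%
```

Under those assumptions, turning off the three detection features cuts average draw to a little under half of the all-sensors figure.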

The Experience

When activated, the Glass HUD appears to float about an arm’s length ahead of the wearer in the upper right corner of their vision. Google documentation states the “display is the equivalent of a 25 inch high definition screen from eight feet away.” It is semi-transparent, so you can see objects behind the HUD, but when capturing images or video, focus is drawn to the camera’s full field of view.

The default interface, when activated, displays the time and activation phrase (“OK Glass”). After speaking the keywords, the user is presented with a menu of activities, based on the GlassWare applications installed on the device.

Some commands work in offline mode (e.g., take a picture, record a video, make a call to…), while others require data connectivity. Any command with a complex speech-to-text requirement uses a data connection to process the conversion.
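The offline/online split can be pictured as a simple routing check. The sketch below is illustrative only: the command phrases come from the examples in this section, while `OFFLINE_COMMANDS`, `route_command`, and the prefix matching are hypothetical and do not describe how Glass actually dispatches voice commands.

```python
# Hypothetical sketch, not the Glass implementation.
# Phrases the text lists as working without a data connection.
OFFLINE_COMMANDS = ("take a picture", "record a video", "make a call to")


def route_command(phrase: str, has_data: bool) -> str:
    """Return 'local', 'cloud', or 'unavailable' for a spoken phrase."""
    normalized = phrase.strip().lower()
    if normalized.startswith(OFFLINE_COMMANDS):
        return "local"  # handled on the device
    # Everything else is assumed to need server-side speech-to-text.
    return "cloud" if has_data else "unavailable"


print(route_command("Take a picture", has_data=False))
print(route_command("Send a message to the team", has_data=False))
```

The first call resolves on the device. The second needs a connection, so it is reported as unavailable when offline.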

Pictures and video are synced to the Google cloud, but the device can be used for capture when offline. Glass has 16GB of storage, with roughly 12GB usable.

Augmented Reality Demo: Word Lens

One of the early GlassWare applications, Word Lens, is a proof-of-concept Augmented Reality app that translates text you're looking at into your native language, in real-time.

Focus on the words you’d like translated (first image), and Word Lens will auto-zoom and translate in context (second image).

The translation engine isn't perfect, and it clearly demands a lot from the hardware, but it is a good indicator of what AR applications could do on a future iteration of the hardware.

Purpose-built AR applications could, for example, help surgeons prepare by overlaying procedural steps or key data on real-world images:

(mock-up)

(mock-up)

Other uses:

- Overlay labelled wireframes over real-world images to aid techs when troubleshooting devices

- Display key information in difficult environments, e.g., push performance alerts to supervisors on the factory floor

- Medical transcription – Although largely replaced with electronic notes, doctors in some scenarios (particularly surgical) still dictate notes for transcription. Glass could capture and upload audio directly.

- Plant inspections – With location information, a 5MP camera, 720p video capture, and the ability to add annotations/captions to photos, Glass could streamline data collection for plant inspections and similar activities.

Google Now Integration

For existing Android users, Google Now may already be a familiar experience. Google Now learns your habits and scans your Gmail in an attempt to present search results before you ask for them.

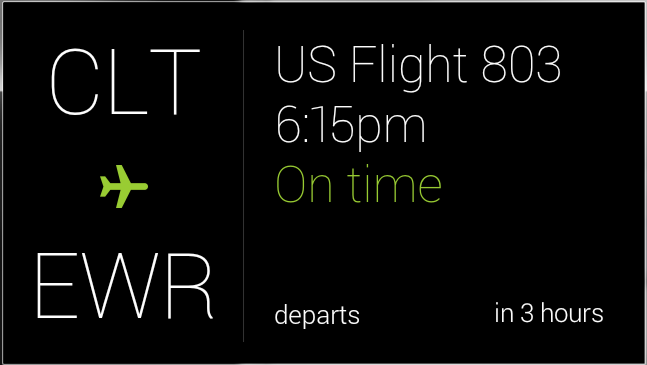

For example, if you’ve booked a flight and sent the confirmation to your Gmail account, Google Now will parse the itinerary, warn you when you need to leave for the airport, give you the status of your flight, and even tell you the weather in your destination. Glass can present data from Google Now out-of-the-box, and the card-based presentation format is ideally suited to the Glass interface.

The screen captures shown here resulted from a travel itinerary that included Charlotte, NC. On the weather screen, the user’s current location (Raritan) takes priority, followed by the travel destination (Charlotte) and the weather at home (Flemington).
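The ordering observed on the weather screen can be expressed as a small priority sort. This is a sketch of the behaviour as seen in the captures, not Google Now's actual ranking logic; the role names, `ROLE_PRIORITY`, and `order_weather_cards` are hypothetical.

```python
# Lower number = shown first. Mirrors the order seen in the screen
# captures; the roles and this function are hypothetical.
ROLE_PRIORITY = {"current": 0, "destination": 1, "home": 2}


def order_weather_cards(cards):
    """Sort (role, city) pairs by role priority."""
    return sorted(cards, key=lambda card: ROLE_PRIORITY[card[0]])


cards = [
    ("home", "Flemington"),
    ("destination", "Charlotte"),
    ("current", "Raritan"),
]
ordered = [city for _, city in order_weather_cards(cards)]
print(ordered)  # ['Raritan', 'Charlotte', 'Flemington']
```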

While the itinerary is active, Google Now regularly updates the status of the flights. By tapping the top-level flight screen, the user is able to see additional details such as the boarding gate, without needing to actively search email or flight trackers for the additional information.
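A top-level card with tap-to-reveal details maps naturally onto a nested data structure. The sketch below shows one way to model it; the `Card` class, its fields, and the placeholder values are illustrative assumptions and are not taken from any Glass or Google Now API.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Card:
    """Hypothetical model of a card with optional drill-down cards."""
    title: str
    text: str = ""
    details: List["Card"] = field(default_factory=list)

    def tap(self) -> List["Card"]:
        """Return the drill-down cards revealed by tapping this card."""
        return list(self.details)


# Placeholder values; a real card would carry live flight data.
flight = Card(
    title="Flight status",
    text="<status>",
    details=[Card(title="Boarding gate", text="<gate>")],
)

for detail in flight.tap():
    print(f"{detail.title}: {detail.text}")
```

Keeping the details as child cards lets the same structure hold photos, audio, or video entries without changing the top-level card.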

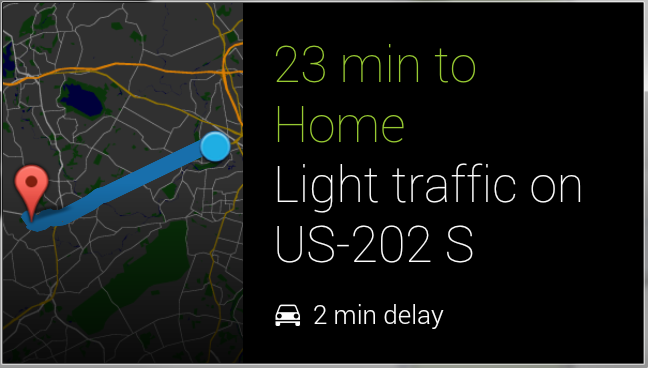

Google Now also learns your commuting patterns. At the beginning and end of the work day, it displays an overview of a user’s route to work or home, and warns of any delays along the way. By tapping on this card, Glass presents the option to initiate turn-by-turn navigation.